
Six Months, Two Developers, 40 Languages: Inside Vocam's Rapid Build

Oct 27, 2025


When Idil Doga Turkmen, a freshman at Caltech, posted a message in an Expo development Discord server this past June, she was looking for technical help with a language learning app she'd been building. Matheus Cruz, a software engineering intern in Brazil learning React Native, saw her message and thought: "She's building something interesting with the exact tech stack I'm trying to learn. Let's see how it goes."

Six months later, the two have never met in person. But together, they've built Vocam, a visual language learning app that uses computer vision to help users learn vocabulary by scanning objects around them. The app supports over 40 languages and is preparing for launch on the Google Play Store.

Their story illustrates something profound: we're not just in an AI revolution. We're in a revolution of who gets to build with AI.

The Problem: Traditional Language Learning Fails Visual Learners

Idil's original inspiration was deceptively simple. While trying to learn French, she'd started putting sticky note labels on objects around her house—a tactile, visual approach to building vocabulary. Around the same time, she was playing Pokémon GO and appreciated how the game used your camera to interact with your environment.

"I thought, why not bring those two ideas and technology together?" Idil explains.

But the insight runs deeper than convenience. Idil, a visual learner herself, noticed that many of her neurodivergent friends learned the same way. When she researched further, she found evidence supporting what she'd observed in her own life: visual presentation of learning content is particularly effective in enhancing attention in students with ADHD, and children with ADHD generally benefit from visual learning—by watching or doing tasks in an activity-based, hands-on format rather than through lectures or memorization. Yet most language apps still rely heavily on text and audio-based methods.

Vocam's core feature is straightforward: point your phone's camera at any object, and the app identifies it and provides the translation in your target language. You build your vocabulary not from flashcards, but from your actual environment—your coffee mug, your backpack, the tree outside your window.

"It's more immersive," Idil notes. "Since it uses visuals and your environment, it can be integrated into everyday life in a way that's more impactful than only text-based learning."

The Meta-Story: AI Makes the Impossible Possible

Here's what makes Vocam remarkable: this project would have been technically impossible just a few years ago, and Idil and Matheus might never have been able to build it at all.

Consider the constraints they're working with:

- Two developers, both students

- Never met in person

- Limited budget (currently using free tiers of services)

- Building across 40+ languages

- Deploying computer vision AI on mobile devices

- Six months from concept to beta

"I don't know if object recognition was too advanced five years ago," Matheus reflects. "I think it was really bad, and the solution in itself wouldn't be great." The computer vision APIs they're using from Google can now identify objects with high confidence scores—performance that simply didn't exist at consumer-accessible price points even recently.

But the technical feasibility of image recognition is only part of the story. Both Idil and Matheus credit AI assistants like ChatGPT and Claude with enabling them to learn and build far beyond their initial skill levels.

"I was not familiar with working with this type of app building or object recognition," Idil admits. "AI helps a lot with learning along the way. But I realized I needed someone technical on the project."

That's where Matheus came in—but even he was learning as he went. "Basically, I try to translate what I know, because the programming logic is the same across languages. And if I need help, AI is just the best teacher in the world. You can just ask it and it will help, because programming is really straightforward."

They're using AI to build AI. The assistants help them write code, debug issues, and learn new frameworks in real time. When a recent Expo version upgrade broke much of the UI, they worked through the fixes over a weekend, a turnaround that would have taken much longer without AI pair programming.

The Technical Challenges: When Your Notebook Might Be a Planner

Of course, language and visual recognition both involve inherent ambiguity. A spiral-bound book with blank pages could be a notebook, a journal, a planner, or a sketchbook depending on context and intent. How does Vocam handle this?

"We basically select the most general thing that you can capture via a photo," Matheus explains. The app also shows probability scores—"this picture has a 90% chance of being a notebook"—and gives users the power to confirm or override the identification.

Users can also manually add words they want to learn, getting the translation and pronunciation without taking a photo. This flexibility acknowledges that perfect computer vision isn't the goal—rather, it's about giving learners multiple pathways to build vocabulary in ways that work for them.
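The flow described above, a suggested label with a probability score that the user can confirm or replace, can be sketched in a few lines of TypeScript. This is a minimal illustration, not Vocam's actual code: the `LabelCandidate` shape, the function names, and the sample scores are all hypothetical, and picking the highest-scoring candidate is an assumption standing in for Matheus's "most general thing" rule, which the article does not spell out.

```typescript
// Hypothetical shape of one candidate label from an image-labeling service.
interface LabelCandidate {
  description: string; // e.g. "Notebook"
  score: number; // confidence between 0 and 1
}

// What the app would store for a scanned or typed word (illustrative only).
interface Identification {
  word: string;
  confidencePercent: number | null; // null when the user typed the word
  source: "model" | "user";
}

// Suggest the highest-scoring candidate (an assumption; the real rule may differ).
function suggestLabel(candidates: LabelCandidate[]): Identification | null {
  if (candidates.length === 0) return null;
  const best = candidates.reduce((a, b) => (b.score > a.score ? b : a));
  return {
    word: best.description.toLowerCase(),
    confidencePercent: Math.round(best.score * 100),
    source: "model",
  };
}

// Keep the suggestion when the user confirms it, or replace it with their own word.
function resolveLabel(
  suggestion: Identification | null,
  userOverride?: string
): Identification | null {
  const typed = userOverride === undefined ? "" : userOverride.trim();
  if (typed.length > 0) {
    return { word: typed.toLowerCase(), confidencePercent: null, source: "user" };
  }
  return suggestion;
}

// Made-up scores for the spiral-bound book example.
const candidates: LabelCandidate[] = [
  { description: "Notebook", score: 0.9 },
  { description: "Planner", score: 0.62 },
  { description: "Sketchbook", score: 0.41 },
];

const suggestion = suggestLabel(candidates); // the model's best guess
const confirmed = resolveLabel(suggestion); // user accepts the guess
const overridden = resolveLabel(suggestion, "planner"); // user corrects it
```

Calling `resolveLabel(null, "word")` also covers the manual path, where a learner adds a word without taking a photo.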

The team currently relies on free translation and example-sentence APIs, which occasionally generate "weird sentences," as Idil puts it. As they grow, they plan to invest in more robust paid services. For now, they're focused on the fundamentals: improving loading times, managing database connections efficiently to stay within free tiers, and preparing for their Google Play Store launch.

What's Next: Community, Conversation, and Scale

Looking ahead, Idil and Matheus envision several major additions to Vocam:

- Social features where users could interact with each other and share learning progress

- An AI conversation partner that lets users practice the language they're learning with a chatbot tutor

- Eventually, real-time recognition that wouldn't require taking photos, though both acknowledge that's a feature for a future version when they have more development capacity

"I think the tech is already okay," Matheus notes pragmatically, "but our development should focus on other aspects for now. We are a team of two, so we don't have too much people."

The path to sustainability is also on their minds. "In a year, we'll hopefully have a lot of users, and then we won't be able to use free Supabase anymore," Matheus points out. "Our costs will really go up. It would be really bad if we haven't made a single dollar on it."

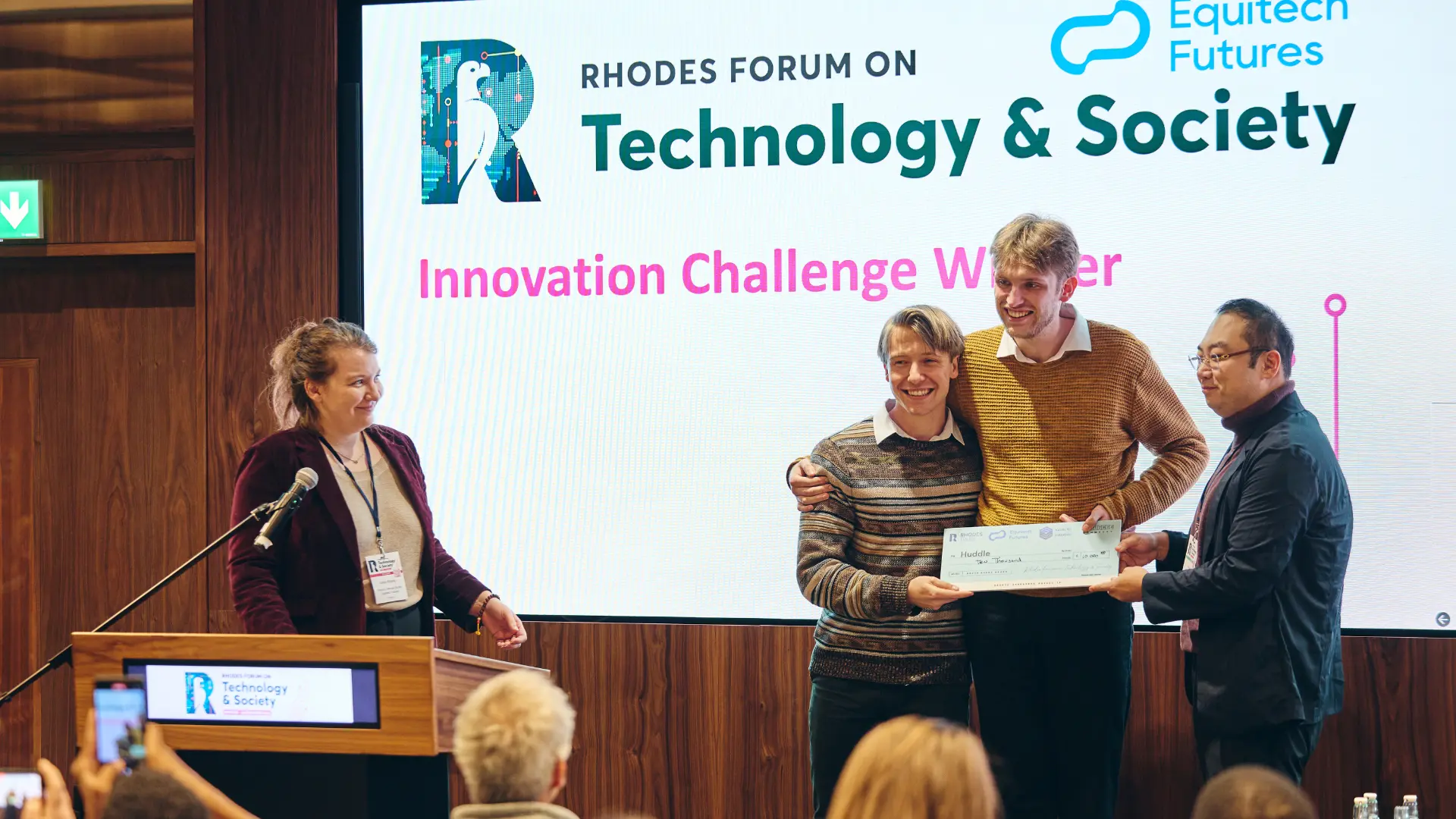

But first, they need to get to launch—and then to Oxford, where they'll meet in person for the first time at the Innovation Challenge showcase.

"Looking back from starting this in May, I wouldn't have ever thought it would be an actual app that I could use," Idil reflects. "Seeing that icon on my phone is really rewarding."

Vocam is a finalist in the Kevin Xu Innovation Challenge, part of the Rhodes Forum on Technology & Society.

Want to try Vocam? The team is currently building their beta testing list. You can reach out to Idil at vocam.app@gmail.com to join the early access program.
